Research

I am a Research Scientist at NVIDIA Research (My NVIDIA research page). I joined NVIDIA in 2011 after obtaining my Ph.D. degree from Grenoble University at INRIA in France (thesis document here). My research interests include real-time realistic rendering, global illumination, alternative geometric and material representations (voxel-based), ray-tracing, anti-aliasing techniques, distributed rendering, as well as out-of-core data management. My predominant research direction focuses on the use of pre-filtered geometric representations for the efficient anti-aliased rendering of detailed scenes and complex objects, as well as global illumination effects. My most impactful contributions are the GigaVoxels rendering pipeline and the GIVoxels/VXGI voxel-based indirect illumination technique, with several hardware implications in the NVIDIA Maxwell architecture.

Publications

2023 |

|

|

Luminance-Preserving and Temporally Stable Daltonization Ebelin, Pontus; Crassin, Cyril; Denes, Gyorgy; Oskarsson, Magnus; Åström, Kalle; Akenine-Möller, Tomas Eurographics 2023 Short Papers, 2023. Inproceedings @inproceedings{ECDOAA23,

title = {Luminance-Preserving and Temporally Stable Daltonization}, author = {Pontus Ebelin and Cyril Crassin and Gyorgy Denes and Magnus Oskarsson and Kalle Åström and Tomas Akenine-Möller}, url = {https://research.nvidia.com/publication/2023-05_daltonization, NVIDIA Research page https://diglib.eg.org/items/fc9b3da1-606d-40f4-808f-aa2e319f39d9, Eurographics webpage /research/publications/ECDOAA23/LuminancePreservingTemporallyStableDaltonization.pdf, Paper /research/publications/ECDOAA23/LuminancePreservingTemporallyStableDaltonizationSupp.pdf, Supplemental PDF /research/publications/ECDOAA23/LuminancePreservingTemporallyStableDaltonizationSupp.pdf, Presentation PDF /research/publications/ECDOAA23/Daltonization_EG23_Talk_01.mp4, Supplemental Video /research/publications/ECDOAA23/daltonization_transforms_original_protanopia_deuteranopia.zip, Reference transforms}, year = {2023}, date = {2023-05-10}, booktitle = {Eurographics 2023 Short Papers}, abstract = {Color vision deficiencies (CVDs), more commonly known as color blindness, are often caused by genetics and affect the cones on the retina. Approximately 4.5% of the world’s population (8% of males) has some form of CVD. Since it is hard for people with CVD to distinguish between certain colors, there might be a severe loss of information when presenting them with images as, e.g., reds and greens may be indistinguishable. We propose a novel, real-time algorithm for recoloring images to improve the experience for a color vision deficient observer. The output is temporally stable and preserves luminance, the most important visual cue. It runs in 0.2 ms per frame on a GPU.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } Color vision deficiencies (CVDs), more commonly known as color blindness, are often caused by genetics and affect the cones on the retina. Approximately 4.5% of the world’s population (8% of males) has some form of CVD. Since it is hard for people with CVD to distinguish between certain colors, there might be a severe loss of information when presenting them with images as, e.g., reds and greens may be indistinguishable.

We propose a novel, real-time algorithm for recoloring images to improve the experience for a color vision deficient observer. The output is temporally stable and preserves luminance, the most important visual cue. It runs in 0.2 ms per frame on a GPU. |

2021 |

|

|

Refraction Ray Cones for Texture Level of Detail Bokskansky, Jakub; Crassin, Cyril; Akenine-Möller, Tomas Ray Tracing Gems II, Chapter 10, 2021. Book Chapter @inbook{BCA21,

title = {Refraction Ray Cones for Texture Level of Detail}, author = {Jakub Bokskansky and Cyril Crassin and Tomas Akenine-Möller}, url = {https://link.springer.com/chapter/10.1007/978-1-4842-7185-8_10, Springer webpage https://research.nvidia.com/index.php/publication/2021-08_refraction-ray-cones-texture-level-detail, NVIDIA Research page /research/publications/BCA21/RTG2_RefractionRayCones.pdf, Chapter (pdf)}, year = {2021}, date = {2021-08-04}, booktitle = {Ray Tracing Gems II}, chapter = {10}, abstract = {Texture filtering is an important implementation detail of every rendering system. Its purpose is to achieve high-quality rendering of textured surfaces, while avoiding artifacts, such as aliasing, Moire patterns, and unnecessary overblur. In this chapter, we extend the ray cone method for texture level of detail so that it also can handle refraction. Our method is suitable for current game engines and further bridges the gap between offline rendering and real-time ray tracing.}, keywords = {}, pubstate = {published}, tppubtype = {inbook} } Texture filtering is an important implementation detail of every rendering system. Its purpose is to achieve high-quality rendering of textured surfaces, while avoiding artifacts, such as aliasing, Moire patterns, and unnecessary overblur. In this chapter, we extend the ray cone method for texture level of detail so that it also can handle refraction. Our method is suitable for current game engines and further bridges the gap between offline rendering and real-time ray tracing.

|

|

Improved Shader and Texture Level of Detail Using Ray Cones Akenine-Möller, Tomas; Crassin, Cyril; Boksansky, Jakub; Belcour, Laurent; Panteleev, Alexey; Wright, Oli Journal of Computer Graphics Techniques, 2021 , 2021. Journal Article @article{ACBBPW21,

title = {Improved Shader and Texture Level of Detail Using Ray Cones}, author = {Tomas Akenine-Möller and Cyril Crassin and Jakub Boksansky and Laurent Belcour and Alexey Panteleev and Oli Wright}, url = {https://jcgt.org/published/0010/01/01/paper.pdf, Paper https://jcgt.org/published/0010/01/01/, JCGT webpage https://github.com/NVIDIAGameWorks/Falcor/blob/master/Source/Falcor/Rendering/Materials/TexLODHelpers.slang, Code in Falcor (see TexLODHelpers.slang)}, year = {2021}, date = {2021-04-27}, journal = {Journal of Computer Graphics Techniques}, volume = {2021}, abstract = {In real-time ray tracing, texture filtering is an important technique to increase image quality. Current games, such as Minecraft with RTX on Windows 10, use ray cones to determine texture-filtering footprints. In this paper, we present several improvements to the ray-cones algorithm that improve image quality and performance and make it easier to adopt in game engines. We show that the total time per frame can decrease by around 10% in a GPU-based path tracer, and we provide a public-domain implementation.}, keywords = {}, pubstate = {published}, tppubtype = {article} } In real-time ray tracing, texture filtering is an important technique to increase image quality. Current games, such as Minecraft with RTX on Windows 10, use ray cones to determine texture-filtering footprints. In this paper, we present several improvements to the ray-cones algorithm that improve image quality and performance and make it easier to adopt in game engines. We show that the total time per frame can decrease by around 10% in a GPU-based path tracer, and we provide a public-domain implementation.

|

2018 |

|

|

A Ray-Box Intersection Algorithm and Efficient Dynamic Voxel Rendering Majercik, Zander; Crassin, Cyril; Shirley, Peter; McGuire, Morgan Journal of Computer Graphics Techniques, 2018. Journal Article @article{MCSM18,

title = {A Ray-Box Intersection Algorithm and Efficient Dynamic Voxel Rendering}, author = {Zander Majercik and Cyril Crassin and Peter Shirley and Morgan McGuire}, url = {https://jcgt.org/published/0007/03/04/paper.pdf, JCGT Paper - Authors Version https://jcgt.org/published/0007/03/04/supplement.zip, Sample Code https://research.nvidia.com/labs/rtr/publication/majercik2018raybox/, NVIDIA Research page}, year = {2018}, date = {2018-09-20}, journal = {Journal of Computer Graphics Techniques}, abstract = {We introduce a novel and efficient method for rendering large models composed of individually-oriented voxels. The core of this method is a new algorithm for computing the intersection point and normal of a 3D ray with an arbitrarily-oriented 3D box, which also has non-rendering applications in GPU physics, such as ray casting and particle collision detection. We measured throughput improvements of 2× to 10× for the intersection operation versus previous ray-box intersection algorithms on GPUs. Applying this to primary rays increases throughput 20× for direct voxel ray tracing with our method versus rasterization of optimal meshes computed from voxels, due to the combined reduction in both computation and bandwidth. Because this method uses no precomputation or spatial data structure, it is suitable for fully dynamic scenes in which every voxel potentially changes every frame. These improvements can enable a dramatic increase in dynamism, view distance, and scene density for visualization applications and voxel games such as LEGO® Worlds and Minecraft. We provide GLSL code for both our algorithm and previous alternative optimized ray-box algorithms, and an Unreal Engine 4 modification for the entire rendering method.}, keywords = {}, pubstate = {published}, tppubtype = {article} } We introduce a novel and efficient method for rendering large models composed of individually-oriented voxels. The core of this method is a new algorithm for computing the intersection point and normal of a 3D ray with an arbitrarily-oriented 3D box, which also has non-rendering applications in GPU physics, such as ray casting and particle collision detection. We measured throughput improvements of 2× to 10× for the intersection operation versus previous ray-box intersection algorithms on GPUs. Applying this to primary rays increases throughput 20× for direct voxel ray tracing with our method versus rasterization of optimal meshes computed from voxels, due to the combined reduction in both computation and bandwidth. Because this method uses no precomputation or spatial data structure, it is suitable for fully dynamic scenes in which every voxel potentially changes every frame. These improvements can enable a dramatic increase in dynamism, view distance, and scene density for visualization applications and voxel games such as LEGO® Worlds and Minecraft. We provide GLSL code for both our algorithm and previous alternative optimized ray-box algorithms, and an Unreal Engine 4 modification for the entire rendering method.

|

|

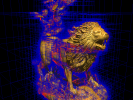

Correlation-Aware Semi-Analytic Visibility for Antialiased Rendering Crassin, Cyril; Wyman, Chris; McGuire, Morgan; Lefohn, Aaron HPG ’18: High-Performance Graphics, 2018. Inproceedings @inproceedings{CWML18,

title = {Correlation-Aware Semi-Analytic Visibility for Antialiased Rendering}, author = {Cyril Crassin and Chris Wyman and Morgan McGuire and Aaron Lefohn}, url = {https://research.nvidia.com/sites/default/files/pubs/2018-08_Correlation-Aware-Semi-Analytic-Visibility//CorrelationAwareVisibility_AuthorsVersion.pdf, Paper - Authors Version /research/publications/CWML18/2018_08_10_Semi-Analytic_Visibility_HPG2018_online.pdf, Presentation (pdf) https://research.nvidia.com/index.php/publication/2018-08_correlation-aware-semi-analytic-visibility-antialiased-rendering, NVIDIA Research page}, year = {2018}, date = {2018-08-10}, booktitle = {HPG ’18: High-Performance Graphics}, abstract = {Geometric aliasing is a persistent challenge for real-time rendering. Hardware multisampling remains limited to 8 × , analytic coverage fails to capture correlated visibility samples, and spatial and temporal postfiltering primarily target edges of superpixel primitives. We describe a novel semi-analytic representation of coverage designed to make progress on geometric antialiasing for subpixel primitives and pixels containing many edges while handling correlated subpixel coverage. Although not yet fast enough to deploy, it crosses three critical thresholds: image quality comparable to 256× MSAA, faster than 64× MSAA, and constant space per pixel.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } Geometric aliasing is a persistent challenge for real-time rendering. Hardware multisampling remains limited to 8 × , analytic coverage fails to capture correlated visibility samples, and spatial and temporal postfiltering primarily target edges of superpixel primitives. We describe a novel semi-analytic representation of coverage designed to make progress on geometric antialiasing for subpixel primitives and pixels containing many edges while handling correlated subpixel coverage. Although not yet fast enough to deploy, it crosses three critical thresholds: image quality comparable to 256× MSAA, faster than 64× MSAA, and constant space per pixel.

|

2016 |

|

|

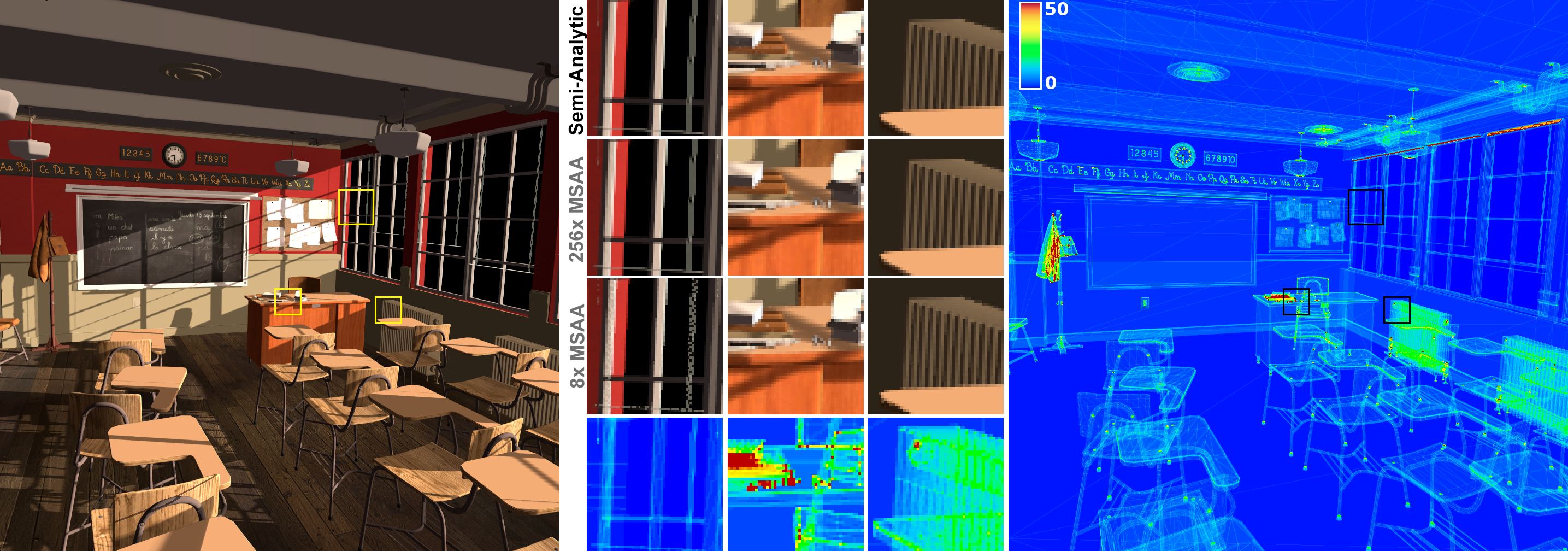

Aggregate G-Buffer Anti-Aliasing in Unreal Engine 4 Bavoil, Louis; Crassin, Cyril Siggraph 2016 Course - Advances in Real-Time Rendering in Games, 2016. Conference @conference{BC16,

title = {Aggregate G-Buffer Anti-Aliasing in Unreal Engine 4}, author = {Louis Bavoil and Cyril Crassin}, url = {/research/publications/BC16/AGAA_UE4_SG2016_slides.pdf, Slides https://research.nvidia.com/sites/default/files/publications/AGAA_UE4_SG2016_6.pdf, Slides with notes https://research.nvidia.com/publication/2016-07_aggregate-g-buffer-anti-aliasing-unreal-engine-4, NVIDIA Research page}, year = {2016}, date = {2016-07-25}, booktitle = {Siggraph 2016 Course - Advances in Real-Time Rendering in Games}, abstract = {In recent years, variants of Temporal Anti-Aliasing (TAA) have become the techniques of choice for fast post-process anti-aliasing, approximating super-sampled AA amortized over multiple frames. While TAA generally greatly improves quality over previous post-process AA algorithms, the approach can also suffer from inherent artifacts, namely ghosting and flickering, in the presence of complex sub-pixel geometry and/or sub-pixel specular highlights. In this talk, we will share our experience from implementing Aggregate G-Buffer Anti-Aliasing (AGAA) in Unreal Engine 4. AGAA approximates deferred lighting from a 4x or 8x super-sampled G-Buffer while maintaining a low shading rate per pixel (at most two lighting evaluations per pixel). This decoupling is achieved by clustering the G-Buffer samples and pre-filtering their geometric and shading attributes into pixel-space aggregates. We will start with a recap of the general benefits of normal map and surface curvature pre-filtering and detail how we have implemented them in the deferred renderer of UE4. We will then present the AGAA technique and its prerequisites in a game engine. We will describe the changes that we made to the engine to implement AGAA (clustering, on-the-fly aggregation, and resolve), the interactions with translucency and HDR lighting, and finally how temporal reprojection can be used with AGAA to further improve image quality.}, keywords = {}, pubstate = {published}, tppubtype = {conference} } In recent years, variants of Temporal Anti-Aliasing (TAA) have become the techniques of choice for fast post-process anti-aliasing, approximating super-sampled AA amortized over multiple frames. While TAA generally greatly improves quality over previous post-process AA algorithms, the approach can also suffer from inherent artifacts, namely ghosting and flickering, in the presence of complex sub-pixel geometry and/or sub-pixel specular highlights. In this talk, we will share our experience from implementing Aggregate G-Buffer Anti-Aliasing (AGAA) in Unreal Engine 4. AGAA approximates deferred lighting from a 4x or 8x super-sampled G-Buffer while maintaining a low shading rate per pixel (at most two lighting evaluations per pixel). This decoupling is achieved by clustering the G-Buffer samples and pre-filtering their geometric and shading attributes into pixel-space aggregates. We will start with a recap of the general benefits of normal map and surface curvature pre-filtering and detail how we have implemented them in the deferred renderer of UE4. We will then present the AGAA technique and its prerequisites in a game engine. We will describe the changes that we made to the engine to implement AGAA (clustering, on-the-fly aggregation, and resolve), the interactions with translucency and HDR lighting, and finally how temporal reprojection can be used with AGAA to further improve image quality.

|

2015 |

|

|

The SGGX microflake distribution Heitz, Eric; Dupuy, Jonathan; Crassin, Cyril; Dachsbacher, Carsten ACM Transactions on Graphics (TOG) - Proceedings of ACM SIGGRAPH 2015, 2015. Journal Article @article{HDCD15,

title = {The SGGX microflake distribution}, author = {Eric Heitz and Jonathan Dupuy and Cyril Crassin and Carsten Dachsbacher}, url = {https://research.nvidia.com/sites/default/files/pubs/2015-08_The-SGGX-microflake/sggx.pdf, Paper authors version https://dl.acm.org/doi/suppl/10.1145/2766988/suppl_file/a48-heitz.zip, Supplemental material https://dl.acm.org/doi/suppl/10.1145/2766988/suppl_file/a48.mp4, Presentation video https://dl.acm.org/doi/10.1145/2766988, ACM page https://research.nvidia.com/publication/2015-08_sggx-microflake-distribution, NVIDIA Research page}, year = {2015}, date = {2015-08-01}, journal = {ACM Transactions on Graphics (TOG) - Proceedings of ACM SIGGRAPH 2015}, abstract = {We introduce the Symmetric GGX (SGGX) distribution to represent spatially-varying properties of anisotropic microflake participating media. Our key theoretical insight is to represent a microflake distribution by the projected area of the microflakes. We use the projected area to parameterize the shape of an ellipsoid, from which we recover a distribution of normals. The representation based on the projected area allows for robust linear interpolation and prefiltering, and thanks to its geometric interpretation, we derive closed form expressions for all operations used in the microflake framework. We also incorporate microflakes with diffuse reflectance in our theoretical framework. This allows us to model the appearance of rough diffuse materials in addition to rough specular materials. Finally, we use the idea of sampling the distribution of visible normals to design a perfect importance sampling technique for our SGGX microflake phase functions. It is analytic, deterministic, simple to implement, and one order of magnitude faster than previous work.}, keywords = {}, pubstate = {published}, tppubtype = {article} } We introduce the Symmetric GGX (SGGX) distribution to represent spatially-varying properties of anisotropic microflake participating media. Our key theoretical insight is to represent a microflake distribution by the projected area of the microflakes. We use the projected area to parameterize the shape of an ellipsoid, from which we recover a distribution of normals. The representation based on the projected area allows for robust linear interpolation and prefiltering, and thanks to its geometric interpretation, we derive closed form expressions for all operations used in the microflake framework. We also incorporate microflakes with diffuse reflectance in our theoretical framework. This allows us to model the appearance of rough diffuse materials in addition to rough specular materials. Finally, we use the idea of sampling the distribution of visible normals to design a perfect importance sampling technique for our SGGX microflake phase functions. It is analytic, deterministic, simple to implement, and one order of magnitude faster than previous work.

|

|

|

Aggregate G-Buffer Anti-Aliasing Crassin, Cyril ; McGuire, Morgan ; Fatahalian, Kayvon ; Lefohn, Aaron ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D) -- IEEE Transactions on Visualization and Computer Graphics (TVCG) - October 2016, ACM, IEEE, 2015. Inproceedings @inproceedings{CMFL15,

title = {Aggregate G-Buffer Anti-Aliasing}, author = {Crassin, Cyril and McGuire, Morgan and Fatahalian, Kayvon and Lefohn, Aaron}, url = {http://research.nvidia.com/publication/aggregate-g-buffer-anti-aliasing, NVIDIA Research page http://dl.acm.org/citation.cfm?id=2699285, ACM Library page http://www.icare3d.org/research/publications/CMFL15/AGAA_Extended_TVCG2016_AuthorsVersion.pdf, TVCG Extended version - October 2016 - Authors Version http://research.nvidia.com/sites/default/files/publications/AGAA_I3D2015_authors.pdf, Paper authors version http://www.icare3d.org/research/publications/CMFL15/AGAA_I3D2015_Final_Web.pdf, Slides PDF http://www.icare3d.org/research/publications/CMFL15/AGAA_I3D2015_Final_Web.pptx, Slides PPTX http://files.icare3d.org/AGAA/AGAA_I3D15_HighQ.mkv, High quality video (MKV)}, year = {2015}, date = {2015-02-10}, booktitle = {ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D) -- IEEE Transactions on Visualization and Computer Graphics (TVCG) - October 2016}, journal = {ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D)}, publisher = {ACM, IEEE}, abstract = {We present Aggregate G-Buffer Anti-Aliasing (AGAA), a new technique for efficient anti-aliased deferred rendering of complex geometry using modern graphics hardware. In geometrically complex situations where many surfaces intersect a pixel, current rendering systems shade each contributing surface at least once per pixel. As the sample density and geometric complexity increase, the shading cost becomes prohibitive for real-time rendering. Under deferred shading, so does the required framebuffer memory. Our goal is to make high per-pixel sampling rates practical for real-time applications by substantially reducing shading costs and per-pixel storage compared to traditional deferred shading. AGAA uses the rasterization pipeline to generate a compact, pre-filtered geometric representation inside each pixel. We shade this representation at a fixed rate, independent of geometric complexity. By decoupling shading rate from geometric sampling rate, the algorithm reduces the storage and bandwidth costs of a geometry buffer, and allows scaling to high visibility sampling rates for anti-aliasing. AGAA with 2 aggregates per-pixel generates results comparable to 32x MSAA, but requires 54% less memory and is up to 2.6x faster (~30% less memory and 1.7x faster for 8x MSAA).}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } We present Aggregate G-Buffer Anti-Aliasing (AGAA), a new technique for efficient anti-aliased deferred rendering of complex geometry using modern graphics hardware. In geometrically complex situations where many surfaces intersect a pixel, current rendering systems shade each contributing surface at least once per pixel. As the sample density and geometric complexity increase, the shading cost becomes prohibitive for real-time rendering. Under deferred shading, so does the required framebuffer memory. Our goal is to make high per-pixel sampling rates practical for real-time applications by substantially reducing shading costs and per-pixel storage compared to traditional deferred shading. AGAA uses the rasterization pipeline to generate a compact, pre-filtered geometric representation inside each pixel. We shade this representation at a fixed rate, independent of geometric complexity. By decoupling shading rate from geometric sampling rate, the algorithm reduces the storage and bandwidth costs of a geometry buffer, and allows scaling to high visibility sampling rates for anti-aliasing. AGAA with 2 aggregates per-pixel generates results comparable to 32x MSAA, but requires 54% less memory and is up to 2.6x faster (~30% less memory and 1.7x faster for 8x MSAA).

|

2013 |

|

|

CloudLight: A system for amortizing indirect lighting in real-time rendering Crassin, Cyril; Luebke, David; Mara, Michael; McGuire, Morgan; Oster, Brent; Shirley, Peter; Sloan, Peter-Pike; Wyman, Chris 2013. Technical Report @techreport{CLMMOSSW13,

title = {CloudLight: A system for amortizing indirect lighting in real-time rendering}, author = {Cyril Crassin and David Luebke and Michael Mara and Morgan McGuire and Brent Oster and Peter Shirley and Peter-Pike Sloan and Chris Wyman}, url = {https://research.nvidia.com/publication/cloudlight-system-amortizing-indirect-lighting-real-time-rendering/, NVIDIA Research page http://graphics.cs.williams.edu/papers/CloudLight13/Crassin13Cloud.pdf, Technical report http://graphics.cs.williams.edu/papers/CloudLight13/CloudLightSIGGRAPH13.pptx, Siggraph\'13 slides http://graphics.cs.williams.edu/papers/CloudLight13/CloudLightTechReport13.mp4, Video results}, year = {2013}, date = {2013-07-01}, abstract = {We introduce CloudLight, a system for computing indirect lighting in the Cloud to support real-time rendering for interactive 3D applications on a user's local device. CloudLight maps the traditional graphics pipeline onto a distributed system. That differs from a single-machine renderer in three fundamental ways. First, the mapping introduces potential asymmetry between computational resources available at the Cloud and local device sides of the pipeline. Second, compared to a hardware memory bus, the network introduces relatively large latency and low bandwidth between certain pipeline stages. Third, for multi-user virtual environments, a Cloud solution can amortize expensive global illumination costs across users. Our new CloudLight framework explores tradeoffs in different partitions of the global illumination workload between Cloud and local devices, with an eye to how available network and computational power influence design decisions and image quality. We describe the tradeoffs and characteristics of mapping three known lighting algorithms to our system and demonstrate scaling for up to 50 simultaneous CloudLight users.}, keywords = {}, pubstate = {published}, tppubtype = {techreport} } We introduce CloudLight, a system for computing indirect lighting in the Cloud to support real-time rendering for interactive 3D applications on a user's local device. CloudLight maps the traditional graphics pipeline onto a distributed system. That differs from a single-machine renderer in three fundamental ways. First, the mapping introduces potential asymmetry between computational resources available at the Cloud and local device sides of the pipeline. Second, compared to a hardware memory bus, the network introduces relatively large latency and low bandwidth between certain pipeline stages. Third, for multi-user virtual environments, a Cloud solution can amortize expensive global illumination costs across users. Our new CloudLight framework explores tradeoffs in different partitions of the global illumination workload between Cloud and local devices, with an eye to how available network and computational power influence design decisions and image quality. We describe the tradeoffs and characteristics of mapping three known lighting algorithms to our system and demonstrate scaling for up to 50 simultaneous CloudLight users.

|

2012 |

|

|

Dynamic Sparse Voxel Octrees for Next-Gen Real-Time Rendering Crassin, Cyril SIGGRAPH 2012 Course : Beyond Programmable Shading, ACM SIGGRAPH, 2012. Inproceedings @inproceedings{Cra12,

title = {Dynamic Sparse Voxel Octrees for Next-Gen Real-Time Rendering}, author = {Cyril Crassin}, url = {http://www.icare3d.org/research/publications/Cra12/04_crassinVoxels_bps2012.pdf, Slides as PDF https://web.archive.org/web/20171120033835/http://bps12.idav.ucdavis.edu/, Beyond Programmable Shading SIGGRAPH 2012 Webpage}, year = {2012}, date = {2012-08-07}, booktitle = {SIGGRAPH 2012 Course : Beyond Programmable Shading}, publisher = {ACM SIGGRAPH}, abstract = {Sparse Voxel Octrees have gained a growing interest in the industry over the last few years. In this course, I show how SVOs allow building and storing a multi-resolution pre-filtered representation of a scene's geometry. I also introduce the Voxel Cone Tracing (VCT) technique, which can be used to efficiently integrate visibility and evaluate light transport inside a scene, by replacing massive oversampling inside a cone-shaped footprint, by only one single ray casted inside the pre-filtered voxel representation (adapting the geometric resolution to the sampling resolution). Finally, I show multiple use-cases of such techniques for real-time applications : Rendering massive and highly detailed scenes without any aliasing artifacts, highly controllable multi-bounces global illumination, simulating depth-of-field effects, approximated soft shadows, fully procedural content generation... }, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } Sparse Voxel Octrees have gained a growing interest in the industry over the last few years. In this course, I show how SVOs allow building and storing a multi-resolution pre-filtered representation of a scene's geometry. I also introduce the Voxel Cone Tracing (VCT) technique, which can be used to efficiently integrate visibility and evaluate light transport inside a scene, by replacing massive oversampling inside a cone-shaped footprint, by only one single ray casted inside the pre-filtered voxel representation (adapting the geometric resolution to the sampling resolution). Finally, I show multiple use-cases of such techniques for real-time applications : Rendering massive and highly detailed scenes without any aliasing artifacts, highly controllable multi-bounces global illumination, simulating depth-of-field effects, approximated soft shadows, fully procedural content generation...

|

|

Octree-Based Sparse Voxelization Using The GPU Hardware Rasterizer Crassin, Cyril ; Green, Simon OpenGL Insights, CRC Press, Patrick Cozzi and Christophe Riccio, 2012. Book Chapter @inbook{CG12,

title = {Octree-Based Sparse Voxelization Using The GPU Hardware Rasterizer}, author = {Crassin, Cyril and Green, Simon}, url = {https://web.archive.org/web/20210308020633/https://www.seas.upenn.edu/~pcozzi/OpenGLInsights/OpenGLInsights-SparseVoxelization.pdf, Chapter PDF https://blog.icare3d.org/2012/05/gtc-2012-talk-octree-based-sparse.html, GTC 2012 Talk /research/publications/CG12/S0610-Octree-Based-Sparse-Voxelization-for-RT-Global-Illumination.flv, GTC 2012 Talk Video /research/publications/CG12/GTC2012_Voxelization_public.pdf, GTC 2012 Slides PDF /research/publications/CG12/GTC2012_Voxelization_public.pptx, GTC 2012 Slides PPTX https://openglinsights.com/, OpenGL Insights Website}, year = {2012}, date = {2012-07-01}, booktitle = {OpenGL Insights}, publisher = {CRC Press, Patrick Cozzi and Christophe Riccio}, abstract = {Discrete voxel representations are generating growing interest in a wide range of applications in computational sciences and particularly in computer graphics. In this chapter, we first describe an efficient OpenGL implementation of a simple surface voxelization algorithm that produces a regular 3D texture. This technique uses the GPU hardware rasterizer and the new image load/store interface exposed by OpenGL 4.2. The first part of this chapter will allow to familiarize the reader with the general algorithm and the new OpenGL features we leverage. In the second part we describe an extension of this approach, which enables building and updating a sparse voxel representation in the form of an octree structure. In order to scale to very large scenes, our approach avoids relying on an intermediate full regular grid to build the structure and constructs the octree directly. This second approach exploits the draw indirect features standardized in OpenGL 4.0 in order to allow synchronization-free launching of shader threads during the octree construction, as well as the new atomic counter functions exposed in OpenGL 4.2.}, keywords = {}, pubstate = {published}, tppubtype = {inbook} } Discrete voxel representations are generating growing interest in a wide range of applications in computational sciences and particularly in computer graphics. In this chapter, we first describe an efficient OpenGL implementation of a simple surface voxelization algorithm that produces a regular 3D texture. This technique uses the GPU hardware rasterizer and the new image load/store interface exposed by OpenGL 4.2.

The first part of this chapter will allow to familiarize the reader with the general algorithm and the new OpenGL features we leverage. In the second part we describe an extension of this approach, which enables building and updating a sparse voxel representation in the form of an octree structure. In order to scale to very large scenes, our approach avoids relying on an intermediate full regular grid to build the structure and constructs the octree directly. This second approach exploits the draw indirect features standardized in OpenGL 4.0 in order to allow synchronization-free launching of shader threads during the octree construction, as well as the new atomic counter functions exposed in OpenGL 4.2. |

2011 |

|

|

Interactive Indirect Illumination Using Voxel Cone Tracing Crassin, Cyril ; Neyret, Fabrice ; Sainz, Miguel ; Green, Simon ; Eisemann, Elmar Computer Graphics Forum (Proc. of Pacific Graphics 2011), 2011. Journal Article @article{CNSGE11b,

title = {Interactive Indirect Illumination Using Voxel Cone Tracing}, author = {Crassin, Cyril and Neyret, Fabrice and Sainz, Miguel and Green, Simon and Eisemann, Elmar}, url = {http://research.nvidia.com/publication/interactive-indirect-illumination-using-voxel-cone-tracing, NVIDIA publication webpage http://maverick.inria.fr/Publications/2011/CNSGE11b/, INRIA publication webpage http://www.icare3d.org/research/publications/CNSGE11b/GIVoxels-pg2011-authors.pdf, Paper authors version http://research.nvidia.com/sites/default/files/publications/GIVoxels_Siggraph2011_web.pptx, Siggraph 2011 Talk}, year = {2011}, date = {2011-09-01}, booktitle = {Computer Graphics Forum (Proc. of Pacific Graphics 2011)}, journal = {Computer Graphics Forum (Proc. of Pacific Graphics 2011)}, abstract = {Indirect illumination is an important element for realistic image synthesis, but its computation is expensive and highly dependent on the complexity of the scene and of the BRDF of the involved surfaces. While off-line computation and pre-baking can be acceptable for some cases, many applications (games, simulators, etc.) require real-time or interactive approaches to evaluate indirect illumination. We present a novel algorithm to compute indirect lighting in real-time that avoids costly precomputation steps and is not restricted to low-frequency illumination. It is based on a hierarchical voxel octree representation generated and updated on the fly from a regular scene mesh coupled with an approximate voxel cone tracing that allows for a fast estimation of the visibility and incoming energy. Our approach can manage two light bounces for both Lambertian and glossy materials at interactive framerates (25-70FPS). It exhibits an almost scene-independent performance and can handle complex scenes with dynamic content thanks to an interactive octree-voxelization scheme. In addition, we demonstrate that our voxel cone tracing can be used to efficiently estimate Ambient Occlusion. }, keywords = {}, pubstate = {published}, tppubtype = {article} } Indirect illumination is an important element for realistic image synthesis, but its computation is expensive and highly dependent on the complexity of the scene and of the BRDF of the involved surfaces. While off-line computation and pre-baking can be acceptable for some cases, many applications (games, simulators, etc.) require real-time or interactive approaches to evaluate indirect illumination. We present a novel algorithm to compute indirect lighting in real-time that avoids costly precomputation steps and is not restricted to low-frequency illumination.

It is based on a hierarchical voxel octree representation generated and updated on the fly from a regular scene mesh coupled with an approximate voxel cone tracing that allows for a fast estimation of the visibility and incoming energy. Our approach can manage two light bounces for both Lambertian and glossy materials at interactive framerates (25-70FPS). It exhibits an almost scene-independent performance and can handle complex scenes with dynamic content thanks to an interactive octree-voxelization scheme. In addition, we demonstrate that our voxel cone tracing can be used to efficiently estimate Ambient Occlusion. |

|

Interactive Indirect Illumination Using Voxel Cone Tracing: An Insight Crassin, Cyril ; Neyret, Fabrice ; Sainz, Miguel ; Green, Simon ; Eisemann, Elmar SIGGRAPH 2011 : Technical Talk, ACM SIGGRAPH, 2011. Inproceedings @inproceedings{CNSGE11a,

title = {Interactive Indirect Illumination Using Voxel Cone Tracing: An Insight}, author = {Crassin, Cyril and Neyret, Fabrice and Sainz, Miguel and Green, Simon and Eisemann, Elmar}, url = {http://maverick.inria.fr/Publications/2011/CNSGE11a/, Talk INRIA webpage http://maverick.inria.fr/Publications/2011/CNSGE11a/GIVoxels_Siggraph_Talk.pdf, Slides PDF http://maverick.inria.fr/Publications/2011/CNSGE11a/GIVoxels_Siggraph2011.ppt, Slides PPT}, year = {2011}, date = {2011-08-07}, booktitle = {SIGGRAPH 2011 : Technical Talk}, publisher = {ACM SIGGRAPH}, abstract = {Indirect illumination is an important element for realistic image synthesis, but its computation is expensive and highly dependent on the complexity of the scene and of the BRDF of the surfaces involved. While off-line computation and pre-baking can be acceptable for some cases, many applications (games, simulators, etc.) require real-time or interactive approaches to evaluate indirect illumination. We present a novel algorithm to compute indirect lighting in real-time that avoids costly precomputation steps and is not restricted to low frequency illumination. It is based on a hierarchical voxel octree representation generated and updated on-the-fly from a regular scene mesh coupled with an approximate voxel cone tracing that allows a fast estimation of the visibility and incoming energy. Our approach can manage two light bounces for both Lambertian and Glossy materials at interactive framerates (25-70FPS). It exhibits an almost scene-independent performance and allows for fully dynamic content thanks to an interactive octree voxelization scheme. In addition, we demonstrate that our voxel cone tracing can be used to efficiently estimate Ambient Occlusion}, howpublished = {SIGGRAPH 2011 : Technical Talk}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } Indirect illumination is an important element for realistic image synthesis, but its computation is expensive and highly dependent on the complexity of the scene and of the BRDF of the surfaces involved. While off-line computation and pre-baking can be acceptable for some cases, many applications (games, simulators, etc.) require real-time or interactive approaches to evaluate indirect illumination.

We present a novel algorithm to compute indirect lighting in real-time that avoids costly precomputation steps and is not restricted to low frequency illumination. It is based on a hierarchical voxel octree representation generated and updated on-the-fly from a regular scene mesh coupled with an approximate voxel cone tracing that allows a fast estimation of the visibility and incoming energy. Our approach can manage two light bounces for both Lambertian and Glossy materials at interactive framerates (25-70FPS). It exhibits an almost scene-independent performance and allows for fully dynamic content thanks to an interactive octree voxelization scheme. In addition, we demonstrate that our voxel cone tracing can be used to efficiently estimate Ambient Occlusion |

|

GigaVoxels: A Voxel-Based Rendering Pipeline For Efficient Exploration Of Large And Detailed Scenes Crassin, Cyril Grenoble University, 2011. PhD Thesis @phdthesis{Cra11,

title = {GigaVoxels: A Voxel-Based Rendering Pipeline For Efficient Exploration Of Large And Detailed Scenes}, author = {Crassin, Cyril}, url = {http://maverick.inria.fr/Membres/Cyril.Crassin/thesis/CCrassinThesis_EN_Web.pdf, Thesis http://maverick.inria.fr/Publications/2011/Cra11/, INRIA Publication Page}, year = {2011}, date = {2011-07-12}, school = {Grenoble University}, abstract = {In this thesis, we present a new approach to efficiently render large scenes and detailed objects in real-time. Our approach is based on a new volumetric pre-filtered geometry representation and an associated voxel-based approximate cone tracing that allows an accurate and high performance rendering with high quality filtering of highly detailed geometry. In order to bring this voxel representation as a standard real-time rendering primitive, we propose a new GPU-based approach designed to entirely scale to the rendering of very large volumetric datasets. Our system achieves real-time rendering performance for several billion voxels. Our data structure exploits the fact that in CG scenes, details are often concentrated on the interface between free space and clusters of density and shows that volumetric models might become a valuable alternative as a rendering primitive for real-time applications. In this spirit, we allow a quality/performance trade-off and exploit temporal coherence. Our solution is based on an adaptive hierarchical data representation depending on the current view and occlusion information, coupled to an efficient ray-casting rendering algorithm. We introduce a new GPU cache mechanism providing a very efficient paging of data in video memory and implemented as a very efficient data-parallel process. This cache is coupled with a data production pipeline able to dynamically load or produce voxel data directly on the GPU. One key element of our method is to guide data production and caching in video memory directly based on data requests and usage information emitted directly during rendering. We demonstrate our approach with several applications. We also show how our pre-filtered geometry model and approximate cone tracing can be used to very efficiciently achieve blurry effects and real-time indirect lighting.}, keywords = {}, pubstate = {published}, tppubtype = {phdthesis} } In this thesis, we present a new approach to efficiently render large scenes and detailed objects in real-time. Our approach is based on a new volumetric pre-filtered geometry representation and an associated voxel-based approximate cone tracing that allows an accurate and high performance rendering with high quality filtering of highly detailed geometry. In order to bring this voxel representation as a standard real-time rendering primitive, we propose a new GPU-based approach designed to entirely scale to the rendering of very large volumetric datasets.

Our system achieves real-time rendering performance for several billion voxels. Our data structure exploits the fact that in CG scenes, details are often concentrated on the interface between free space and clusters of density and shows that volumetric models might become a valuable alternative as a rendering primitive for real-time applications. In this spirit, we allow a quality/performance trade-off and exploit temporal coherence. Our solution is based on an adaptive hierarchical data representation depending on the current view and occlusion information, coupled to an efficient ray-casting rendering algorithm. We introduce a new GPU cache mechanism providing a very efficient paging of data in video memory and implemented as a very efficient data-parallel process. This cache is coupled with a data production pipeline able to dynamically load or produce voxel data directly on the GPU. One key element of our method is to guide data production and caching in video memory directly based on data requests and usage information emitted directly during rendering. We demonstrate our approach with several applications. We also show how our pre-filtered geometry model and approximate cone tracing can be used to very efficiciently achieve blurry effects and real-time indirect lighting. |

|

Interactive Indirect Illumination Using Voxel Cone Tracing: A Preview Crassin, Cyril ; Neyret, Fabrice ; Sainz, Miguel ; Green, Simon ; Eisemann, Elmar Poster ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D). Best Poster Award., 2011. Inproceedings @inproceedings{CNSGE11,

title = {Interactive Indirect Illumination Using Voxel Cone Tracing: A Preview}, author = {Crassin, Cyril and Neyret, Fabrice and Sainz, Miguel and Green, Simon and Eisemann, Elmar}, url = {http://artis.imag.fr/Publications/2011/CNSGE11, INRIA Publication Webpage http://maverick.inria.fr/Publications/2011/CNSGE11/I3D2011_Poster_web.pdf, Poster PDF}, year = {2011}, date = {2011-02-19}, booktitle = {Poster ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D). Best Poster Award.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } |

2010 |

|

|

Efficient Rendering of Highly Detailed Volumetric Scenes with GigaVoxels Crassin, Cyril ; Neyret, Fabrice ; Sainz, Miguel ; Eisemann, Elmar GPU Pro, pp. 643–676, A K Peters, 2010. Book Chapter @inbook{CNSE10,

title = {Efficient Rendering of Highly Detailed Volumetric Scenes with GigaVoxels}, author = {Crassin, Cyril and Neyret, Fabrice and Sainz, Miguel and Eisemann, Elmar}, url = {http://artis.imag.fr/Publications/2010/CNSE10, INRIA Publication Page }, year = {2010}, date = {2010-07-01}, booktitle = {GPU Pro}, pages = {643--676}, publisher = {A K Peters}, abstract = {GigaVoxels is a voxel-based rendering pipeline that makes the display of very large volumetric datasets very efficient. It is adapted to memory bound environments and it is designed for the data-parallel architecture of the GPU. It is capable of rendering objects at a detail level that matches the screen resolution and interactively adapts to the current point of view. Invisible parts are never even considered for contribution to the final image. As a result, the algorithm obtains interactive to real-time framerates and demonstrates the use of extreme amounts of voxels in rendering, which is applicable in many different contexts. This is also confirmed by many game developers who seriously consider voxels as a potential standard primitive in video games. We will also show in this chapter that voxels are already powerful primitives that, for some rendering tasks, achieve higher performance than triangle-based representations.}, keywords = {}, pubstate = {published}, tppubtype = {inbook} } GigaVoxels is a voxel-based rendering pipeline that makes the display of very large volumetric datasets very efficient. It is adapted to memory bound environments and it is designed for the data-parallel architecture of the GPU. It is capable of rendering objects at a detail level that matches the screen resolution and interactively adapts to the current point of view. Invisible parts are never even considered for contribution to the final image.

As a result, the algorithm obtains interactive to real-time framerates and demonstrates the use of extreme amounts of voxels in rendering, which is applicable in many different contexts. This is also confirmed by many game developers who seriously consider voxels as a potential standard primitive in video games. We will also show in this chapter that voxels are already powerful primitives that, for some rendering tasks, achieve higher performance than triangle-based representations. |

2009 |

|

|

GigaVoxels: Voxels Come Into Play Crassin, Cyril 2009. Miscellaneous @misc{Cra09,

title = {GigaVoxels: Voxels Come Into Play}, author = {Crassin, Cyril}, url = {http://artis.imag.fr/Publications/2009/Cra09, INRIA Webpage http://maverick.inria.fr/Publications/2009/Cra09/GigaVoxels_Crytek_web.ppt, Talk PPT}, year = {2009}, date = {2009-11-01}, booktitle = {Crytek Conference Talk. Crytek GmbH. Frankfurt, Germany}, journal = {Crytek Conference Talk. Crytek GmbH. Frankfurt, Germany}, keywords = {}, pubstate = {published}, tppubtype = {misc} } |

|

Beyond Triangles : GigaVoxels Effects In Video Games Crassin, Cyril ; Neyret, Fabrice ; Lefebvre, Sylvain ; Sainz, Miguel ; Eisemann, Elmar SIGGRAPH 2009 : Technical Talk + Poster (Best Poster Award Finalist), ACM SIGGRAPH, 2009. Inproceedings @inproceedings{CNLSE09,

title = {Beyond Triangles : GigaVoxels Effects In Video Games}, author = {Crassin, Cyril and Neyret, Fabrice and Lefebvre, Sylvain and Sainz, Miguel and Eisemann, Elmar}, url = {http://artis.imag.fr/Publications/2009/CNLSE09, Talk INRIA Webpage http://maverick.inria.fr/Publications/2009/CNLSE09/GigaVoxels_Siggraph09_Slides.pdf, Slides PDF http://maverick.inria.fr/Publications/2009/CNLSE09/gigavoxels_siggraph09_talk.pdf, Sketch PDF}, year = {2009}, date = {2009-08-01}, booktitle = {SIGGRAPH 2009 : Technical Talk + Poster (Best Poster Award Finalist)}, publisher = {ACM SIGGRAPH}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } |

|

Building with Bricks: Cuda-based Gigavoxel Rendering Crassin, Cyril ; Neyret, Fabrice ; Eisemann, Elmar Intel Visual Computing Research Conference, 2009. Inproceedings @inproceedings{CNE09,

title = {Building with Bricks: Cuda-based Gigavoxel Rendering}, author = {Crassin, Cyril and Neyret, Fabrice and Eisemann, Elmar}, url = {http://artis.imag.fr/Publications/2009/CNE09, INRIA Webpage /research/publications/CNE09/IntelConf_Final.pdf, Paper Authors Version}, year = {2009}, date = {2009-03-01}, booktitle = {Intel Visual Computing Research Conference}, journal = {Intel Visual Computing Research Conference}, abstract = {For a long time, triangles have been considered the state-of-sthe-art primitive for fast interactive applications. Only recently, with the dawn of programmability of graphics cards, different representations emerged. Especially for complex entities, triangles have difficulties in representing convincing details and faithful approximations quickly become costly. In this work we investigate Voxels. Voxels can represent very rich and detailed objects and are of crucial importance in medical contexts. Nonetheless, one major downside is their significant memory consumption. Here, we propose an out-of-core method to deal with large volumes in real-time. Only little CPU interaction is needed which shifts the workload towards the GPU. This makes the use of large voxel data sets even easier than the, usually complicated, triangle-based LOD mechanisms that often rely on the CPU. This simplicity might even foreshadow the use of volume data, in game contexts. The latter we underline by presenting very efficient algorithms to approximate standard effects, such as soft shadows, or depth of field.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } For a long time, triangles have been considered the state-of-sthe-art primitive for fast interactive applications.

Only recently, with the dawn of programmability of graphics cards, different representations emerged. Especially for complex entities, triangles have difficulties in representing convincing details and faithful approximations quickly become costly. In this work we investigate Voxels. Voxels can represent very rich and detailed objects and are of crucial importance in medical contexts. Nonetheless, one major downside is their significant memory consumption. Here, we propose an out-of-core method to deal with large volumes in real-time. Only little CPU interaction is needed which shifts the workload towards the GPU. This makes the use of large voxel data sets even easier than the, usually complicated, triangle-based LOD mechanisms that often rely on the CPU. This simplicity might even foreshadow the use of volume data, in game contexts. The latter we underline by presenting very efficient algorithms to approximate standard effects, such as soft shadows, or depth of field. |

|

GigaVoxels : Ray-Guided Streaming for Efficient and Detailed Voxel Rendering Crassin, Cyril ; Neyret, Fabrice ; Lefebvre, Sylvain ; Eisemann, Elmar ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D), ACM, 2009. Inproceedings @inproceedings{CNLE09,

title = {GigaVoxels : Ray-Guided Streaming for Efficient and Detailed Voxel Rendering}, author = {Crassin, Cyril and Neyret, Fabrice and Lefebvre, Sylvain and Eisemann, Elmar}, url = {http://artis.imag.fr/Publications/2009/CNLE09, INRIA Paper Page http://maverick.inria.fr/Publications/2009/CNLE09/CNLE09.pdf, Paper authors version}, year = {2009}, date = {2009-02-01}, booktitle = {ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D)}, publisher = {ACM}, abstract = {We propose a new approach to efficiently render large volumetric data sets. The system achieves interactive to real-time rendering performance for several billion voxels. Our solution is based on an adaptive data representation depending on the current view and occlusion information, coupled to an efficient ray-casting rendering algorithm. One key element of our method is to guide data production and streaming directly based on information extracted during rendering. Our data structure exploits the fact that in CG scenes, details are often concentrated on the interface between free space and clusters of density and shows that volumetric models might become a valuable alternative as a rendering primitive for real-time applications. In this spirit, we allow a quality/performance trade-off and exploit temporal coherence. We also introduce a mipmapping-like process that allows for an increased display rate and better quality through high quality filtering. To further enrich the data set, we create additional details through a variety of procedural methods. We demonstrate our approach in several scenarios, like the exploration of a 3D scan (81923 resolution), of hypertextured meshes (163843 virtual resolution), or of a fractal (theoretically infinite resolution). All examples are rendered on current generation hardware at 20-90 fps and respect the limited GPU memory budget.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } We propose a new approach to efficiently render large volumetric data sets. The system achieves interactive to real-time rendering performance for several billion voxels. Our solution is based on an adaptive data representation depending on the current view and occlusion information, coupled to an efficient ray-casting rendering algorithm. One key element of our method is to guide data production and streaming directly based on information extracted during rendering.

Our data structure exploits the fact that in CG scenes, details are often concentrated on the interface between free space and clusters of density and shows that volumetric models might become a valuable alternative as a rendering primitive for real-time applications. In this spirit, we allow a quality/performance trade-off and exploit temporal coherence. We also introduce a mipmapping-like process that allows for an increased display rate and better quality through high quality filtering. To further enrich the data set, we create additional details through a variety of procedural methods. We demonstrate our approach in several scenarios, like the exploration of a 3D scan (81923 resolution), of hypertextured meshes (163843 virtual resolution), or of a fractal (theoretically infinite resolution). All examples are rendered on current generation hardware at 20-90 fps and respect the limited GPU memory budget. |

2008 |

|

|

Interactive Multiple Anisotropic Scattering In Clouds Bouthors, Antoine ; Neyret, Fabrice ; Max, Nelson ; Bruneton, Eric ; Crassin, Cyril ACM Symposium on Interactive 3D Graphics and Games (I3D), 2008. Inproceedings @inproceedings{BNMBC08,

title = {Interactive Multiple Anisotropic Scattering In Clouds}, author = {Bouthors, Antoine and Neyret, Fabrice and Max, Nelson and Bruneton, Eric and Crassin, Cyril}, url = {http://www-evasion.imag.fr/Publications/2008/BNMBC08, INRIA Publication Page http://maverick.inria.fr/Publications/2008/BNMBC08/cloudsFINAL.pdf, Paper authors version}, year = {2008}, date = {2008-02-01}, booktitle = {ACM Symposium on Interactive 3D Graphics and Games (I3D)}, abstract = {We propose an algorithm for the real time realistic simulation of multiple anisotropic scattering of light in a volume. Contrary to previous real-time methods we account for all kinds of light paths through the medium and preserve their anisotropic behavior. Our approach consists of estimating the energy transport from the illuminated cloud surface to the rendered cloud pixel for each separate order of multiple scattering. We represent the distribution of light paths reaching a given viewed cloud pixel with the mean and standard deviation of their entry points on the lit surface, which we call the collector area. At rendering time for each pixel we determine the collector area on the lit cloud surface for different sets of scattering orders, then we infer the associated light transport. The fast computation of the collector area and light transport is possible thanks to a preliminary analysis of multiple scattering in planeparallel slabs and does not require slicing or marching through the volume. Rendering is done efficiently in a shader on the GPU, relying on a cloud surface mesh augmented with a Hypertexture to enrich the shape and silhouette. We demonstrate our model with the interactive rendering of detailed animated cumulus and cloudy sky at 2-10 frames per second.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } We propose an algorithm for the real time realistic simulation of multiple anisotropic scattering of light in a volume. Contrary to previous real-time methods we account for all kinds of light paths through the medium and preserve their anisotropic behavior.

Our approach consists of estimating the energy transport from the illuminated cloud surface to the rendered cloud pixel for each separate order of multiple scattering. We represent the distribution of light paths reaching a given viewed cloud pixel with the mean and standard deviation of their entry points on the lit surface, which we call the collector area. At rendering time for each pixel we determine the collector area on the lit cloud surface for different sets of scattering orders, then we infer the associated light transport. The fast computation of the collector area and light transport is possible thanks to a preliminary analysis of multiple scattering in planeparallel slabs and does not require slicing or marching through the volume. Rendering is done efficiently in a shader on the GPU, relying on a cloud surface mesh augmented with a Hypertexture to enrich the shape and silhouette. We demonstrate our model with the interactive rendering of detailed animated cumulus and cloudy sky at 2-10 frames per second. |

|

Crassin, Cyril ; Neyret, Fabrice ; Lefebvre, Sylvain INRIA Technical Report 2008. Technical Report @techreport{CNL08,

title = {Interactive GigaVoxels}, author = {Crassin, Cyril and Neyret, Fabrice and Lefebvre, Sylvain}, url = {http://maverick.inria.fr/Publications/2008/CNL08, INRIA Webpage http://maverick.inria.fr/Publications/2008/CNL08/RR-6567.pdf, Technical Report http://maverick.inria.fr/Publications/2008/CNL08/PresGigaVoxels.ppt, Presentation PPT}, year = {2008}, date = {2008-01-01}, institution = {INRIA Technical Report}, abstract = {We propose a new approach for the interactive rendering of large highly detailed scenes. It is based on a new representation and algorithm for large and detailed volume data, especially well suited to cases where detail is concentrated at the interface between free space and clusters of density. This is for instance the case with cloudy sky, landscape, as well as data currently represented as hypertextures or volumetric textures. Existing approaches do not efficiently store, manage and render such data, especially at high resolution and over large extents. Our method is based on a dynamic generalized octree with MIP-mapped 3D texture bricks in its leaves. Data is stored only for visible regions at the current viewpoint, at the appropriate resolution. Since our target scenes contain many sparse opaque clusters, this maintains low memory and bandwidth consumption during exploration. Ray-marching allows to quickly stops when reaching opaque regions. Also, we efficiently skip areas of constant density. A key originality of our algorithm is that it directly relies on the ray-marcher to detect missing data. The march along every ray in every pixel may be interrupted while data is generated or loaded. It hence achieves interactive performance on very large volume data sets. Both our data structure and algorithm are well-fitted to modern GPUs.}, keywords = {}, pubstate = {published}, tppubtype = {techreport} } We propose a new approach for the interactive rendering of large highly detailed scenes. It is based on a new representation and algorithm for large and detailed volume data, especially well suited to cases where detail is concentrated at the interface between free space and clusters of density. This is for instance the case with cloudy sky, landscape, as well as data currently represented as hypertextures or volumetric textures. Existing approaches do not efficiently store, manage and render such data, especially at high resolution and over large extents.

Our method is based on a dynamic generalized octree with MIP-mapped 3D texture bricks in its leaves. Data is stored only for visible regions at the current viewpoint, at the appropriate resolution. Since our target scenes contain many sparse opaque clusters, this maintains low memory and bandwidth consumption during exploration. Ray-marching allows to quickly stops when reaching opaque regions. Also, we efficiently skip areas of constant density. A key originality of our algorithm is that it directly relies on the ray-marcher to detect missing data. The march along every ray in every pixel may be interrupted while data is generated or loaded. It hence achieves interactive performance on very large volume data sets. Both our data structure and algorithm are well-fitted to modern GPUs. |

2007 |

|

|

Rendu Interactif De Nuages Realistes Bouthors, Antoine ; Neyret, Fabrice ; Max, Nelson ; Bruneton, Eric ; Crassin, Cyril AFIG '07 (Actes des 20emes journees de l'AFIG), pp. 183-195, AFIG, Marne la Vall'ee, France, 2007. Inproceedings @inproceedings{BNMBC07,

title = {Rendu Interactif De Nuages Realistes}, author = {Bouthors, Antoine and Neyret, Fabrice and Max, Nelson and Bruneton, Eric and Crassin, Cyril}, url = {http://artis.imag.fr/Publications/2007/BNMBC07, INRIA Webpage http://maverick.inria.fr/Publications/2007/BNMBC07/clouds.pdf, Paper}, year = {2007}, date = {2007-02-01}, booktitle = {AFIG '07 (Actes des 20emes journees de l'AFIG)}, pages = {183-195}, publisher = {AFIG}, address = {Marne la Vall'ee, France}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } |

|

Crassin, Cyril M2 Recherche UJF/INPG, INRIA, 2007. Masters Thesis @mastersthesis{Cra07,

title = {Représentation et Algorithmes pour l'Exploration Interactive de Volumes Procéduraux Étendus et Détaillés}, author = {Crassin, Cyril}, url = {http://maverick.inria.fr/Publications/2007/CN07/, INRIA Publication Page http://maverick.inria.fr/Publications/2007/CN07/RapportINRIA.pdf, Master Thesis}, year = {2007}, date = {2007-01-01}, school = {M2 Recherche UJF/INPG, INRIA}, abstract = {Les scènes naturelles sont souvent a la fois très riches en détails et spatialement vastes. Dans ce projet, on s’intéresse notamment a des données volumiques de type nuage, avalanche, écume. L’industrie des effets spéciaux s’appuie sur des solutions logicielles de rendu de gros volumes de voxels, qui ont permis de très beau résultats, mais a très fort coût en temps de calcul et en mémoire. Réciproquement, la puissance des cartes graphiques programmable (GPU) entraine une convergence entre le domaine du temps réel et du rendu réaliste, cependant la mémoire limitée des cartes fait que les données volumiques représentables en temps réel restent faibles (512 3 est un maximum). Proposer des structures et algorithmes adaptées au GPU permettant un réel passage a l’échelle, et ainsi, de traiter interactivement ce qui demande actuellement des heures de calcul, est le défi que ce projet cherche a relever.}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } Les scènes naturelles sont souvent a la fois très riches en détails et spatialement vastes. Dans ce projet, on s’intéresse notamment a des données volumiques de type nuage, avalanche, écume. L’industrie des effets spéciaux s’appuie sur des solutions logicielles de rendu de gros volumes de voxels, qui ont permis de très beau résultats, mais a très fort coût en temps de calcul et en mémoire.

Réciproquement, la puissance des cartes graphiques programmable (GPU) entraine une convergence entre le domaine du temps réel et du rendu réaliste, cependant la mémoire limitée des cartes fait que les données volumiques représentables en temps réel restent faibles (512 3 est un maximum). Proposer des structures et algorithmes adaptées au GPU permettant un réel passage a l’échelle, et ainsi, de traiter interactivement ce qui demande actuellement des heures de calcul, est le défi que ce projet cherche a relever. |