Day 1: Sunday August 2

I was at High Performance Graphics on Sunday. HPG is the merger of two previous conferences: Graphics Hardware and Interactive Ray Tracing. There were a lot of interesting things, especially on low-level GPU topics and the evolution of real-time graphics toward ray-tracing-based algorithms.

In particular, there was a “Hot 3D” panel where NVIDIA’s Austin Robison gave more information on NVIRT, now called OptiX, with very interesting implementation details. Austin explained a little about how the computation steps of each ray are scheduled on the GPU (especially in the case of recursive operations): persistent threads are launched once to maximize MP occupancy, and each one acts as a state machine switching between computation steps. More info on this on Tuesday at Siggraph. In the same session, James McCombe of Caustic Graphics presented their own real-time RT API, which seems quite similar to NVIDIA’s solution, and Larry Seiler from Intel presented RT on Larrabee. It appears more and more to me that Larrabee won’t be able to compete with GPUs for rasterisation applications, but will be a real killer platform for ray tracing.
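To make the persistent-thread idea a bit more concrete, here is a minimal CPU-side sketch in C++. It is only an illustration under my own assumptions, not OptiX’s actual scheduler: the `Stage` values, the `Ray` struct and `runPersistentThread` are hypothetical names, and a single CPU loop stands in for a GPU thread that is launched once and keeps pulling rays from a shared pool.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-ray stages (an assumption for illustration, not OptiX's real states).
enum class Stage { Traverse, Shade, Done };

struct Ray {
    Stage stage = Stage::Traverse;
    int bouncesLeft = 0;  // remaining recursion depth (secondary rays)
    int shadeCount = 0;   // how many times this ray has been shaded
};

// One "persistent thread": started once, it loops until the shared pool is drained.
// On a GPU, nextRay would be advanced with an atomic counter shared by all threads;
// here a single CPU thread plays that role.
// Returns the number of state-machine steps executed.
int runPersistentThread(std::vector<Ray>& pool, std::size_t& nextRay) {
    int steps = 0;
    while (nextRay < pool.size()) {
        Ray& ray = pool[nextRay++];
        // State machine: recursion becomes a jump back to Traverse
        // instead of a nested function call.
        while (ray.stage != Stage::Done) {
            switch (ray.stage) {
            case Stage::Traverse:
                ray.stage = Stage::Shade;  // pretend an intersection was found
                break;
            case Stage::Shade:
                ++ray.shadeCount;
                if (ray.bouncesLeft > 0) {
                    --ray.bouncesLeft;
                    ray.stage = Stage::Traverse;  // spawn the secondary ray
                } else {
                    ray.stage = Stage::Done;
                }
                break;
            case Stage::Done:
                break;
            }
            ++steps;
        }
    }
    return steps;
}
```

The point of the design is that recursion depth lives in per-ray state rather than on a call stack, so one long-running thread can process any mix of primary and secondary rays.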

In the evening, the conference’s social event took place on a steamboat on the Mississippi, very nice 🙂

Day 2: Monday August 3

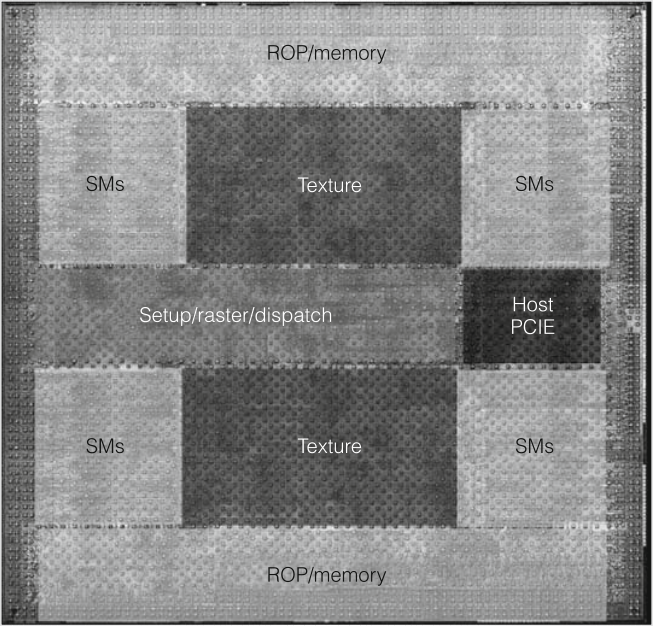

9:30: Still at HPG. The first talk is from Tim Sweeney, founder of Epic Games. Tim presented his vision of the future of real-time graphics: the end of dedicated GPUs as we currently know them (with dedicated units) and of their graphics APIs, replaced by general computing devices that use multi-core vector-processing units to run all code, graphics and non-graphics, uniformly, 100% in software. These devices would be programmed directly in C++ and would allow a wide variety of new algorithms to be implemented, taking advantage of very good load balancing and of memory latency hiding through large caches to provide high performance. In particular, Tim talked about using the REYES rendering pipeline in future video games, and ray tracing for secondary-ray effects. According to Tim, while developing a current video game engine takes 3 years, next-generation engines will need 5 years of development because of their increasing complexity.

9:45: Just got the news that the OpenGL 3.2 specification has been announced by Khronos. The full spec can be downloaded here: http://www.opengl.org/registry/ and, as usual, NVIDIA announced support in their driver: http://developer.nvidia.com/object/opengl_3_driver.html. Main additions to the spec are:

- Increased performance for vertex arrays and fence sync objects to avoid idling while waiting for resources shared between the CPU and GPU, or multiple CPU threads;

- Improved pipeline programmability, including geometry shaders in the OpenGL core;

- Boosted cube map visual quality and multisampling rendering flexibility by enabling shaders to directly process texture samples.

There are also new extensions: ARB_fragment_coord_convention, ARB_provoking_vertex, ARB_vertex_array_bgra, ARB_depth_clamp, WGL_ARB_create_context (updated to create profiles), GLX_ARB_create_context (updated to create profiles), GL_EXT_separate_shader_objects, GL_NV_parameter_buffer_object2, GL_NV_copy_image.

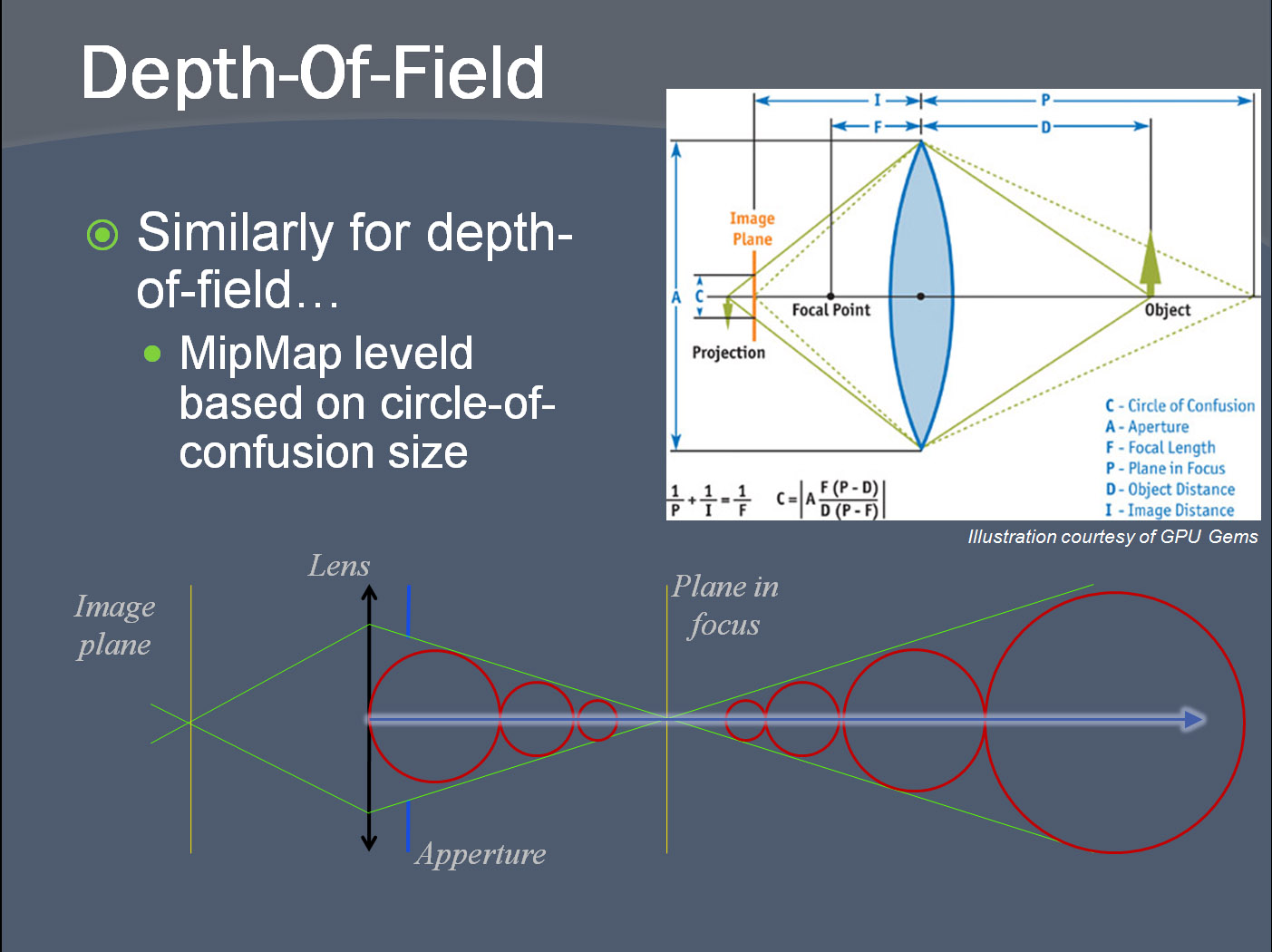

3:00pm: The first Siggraph session I partially followed was the “Advances in Real-Time Rendering in 3D Graphics and Games” course. The most interesting part seemed to be the talk on Light Propagation Volumes in CryEngine 3 by Kaplanyan, where he showed very good results obtained using VPLs (Virtual Point Lights) and Light Propagation Volumes. The Graphics Techniques From Disney’s Pure by Moore and Jeffries was also interesting, as was Making it Smooth: Advances in Antialiasing and Depth of Field Techniques by Yang. It seems that the slides will be available at www.bungie.net/publications in a few days. I didn’t see the talk on Graphics Engine Postmortem from LittleBigPlanet by Evans, but I was told that it was also very interesting, especially for seeing how game developers make their own mixture of published techniques and develop ad-hoc hacks that turn out to be sufficient in most cases.

16:00: Final HPG panel: Tim Sweeney (Epic Games), Larry Gritz (Sony Pictures Imageworks), Steve Parker (NVIDIA), Aaron Lefohn (Intel), Vineet Goel (AMD).

Aaron talked about his vision of a future cross-architecture rendering abstraction, running mostly in user space and made of multiple specialized pipelines (REYES, raster, RT). Steve predicted the death of rasterisation within 7 years, as well as of REYES and RT as separate approaches: future graphics algorithms will be a combination of RT, rasterisation and REYES (a blend or a unification). The question is how hardware manufacturers will fit into this market.

Day 3: Tuesday August 4

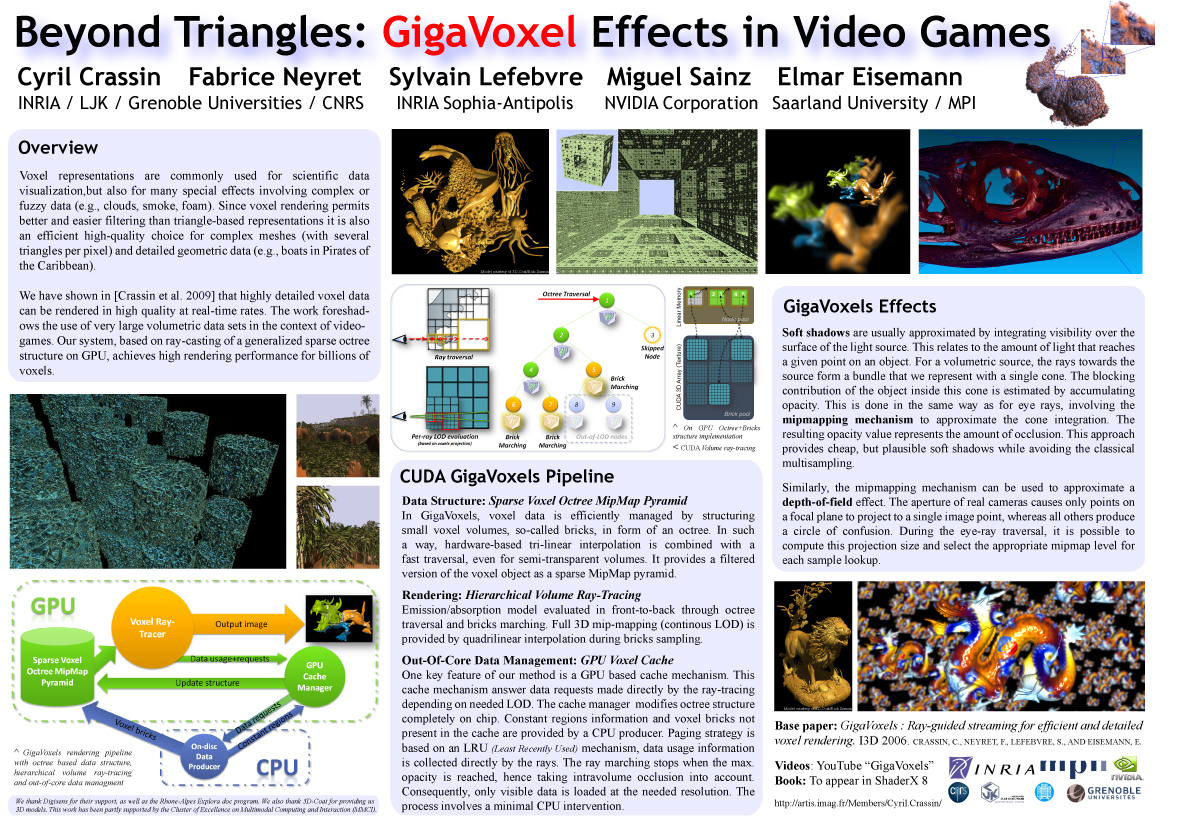

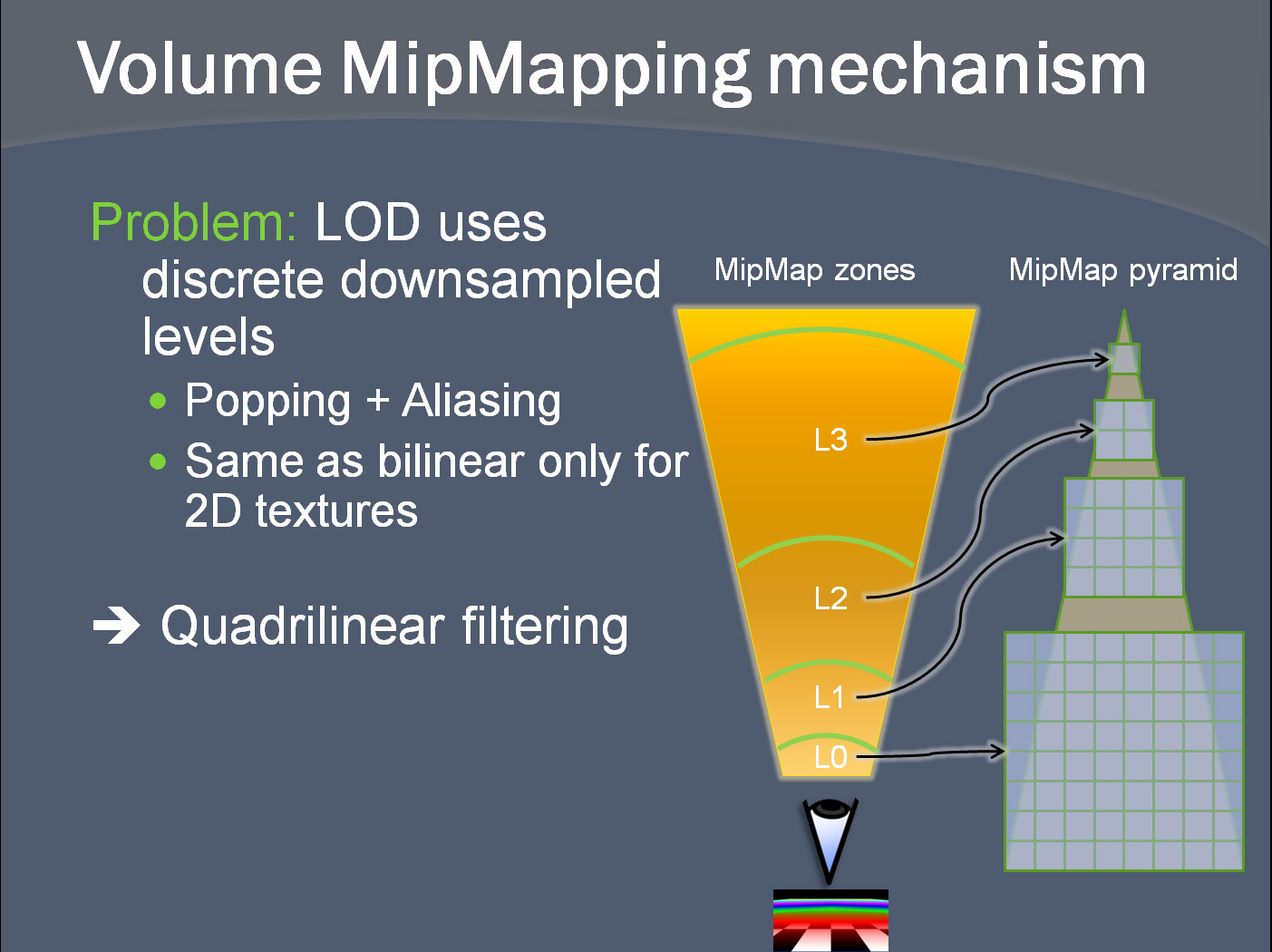

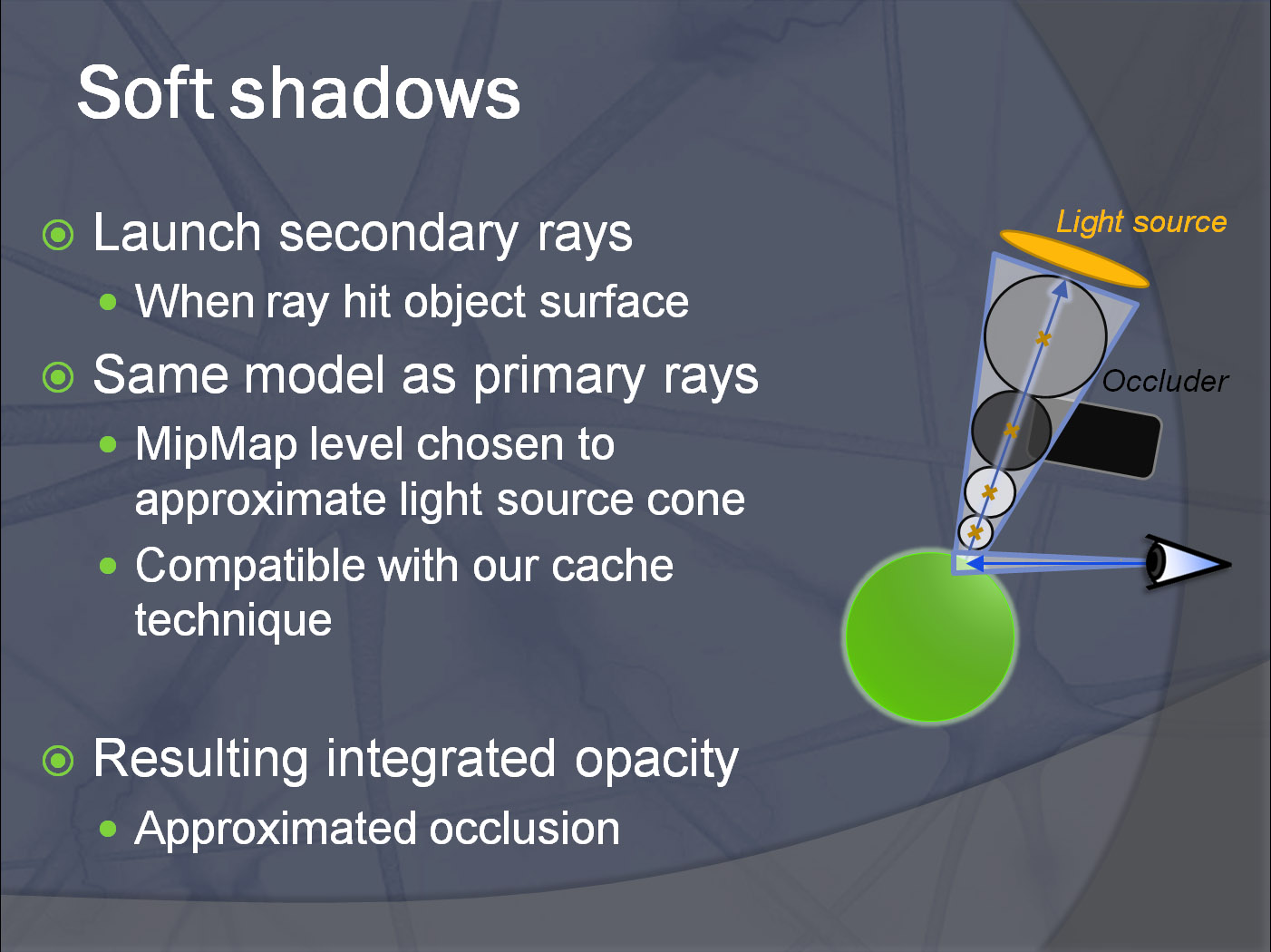

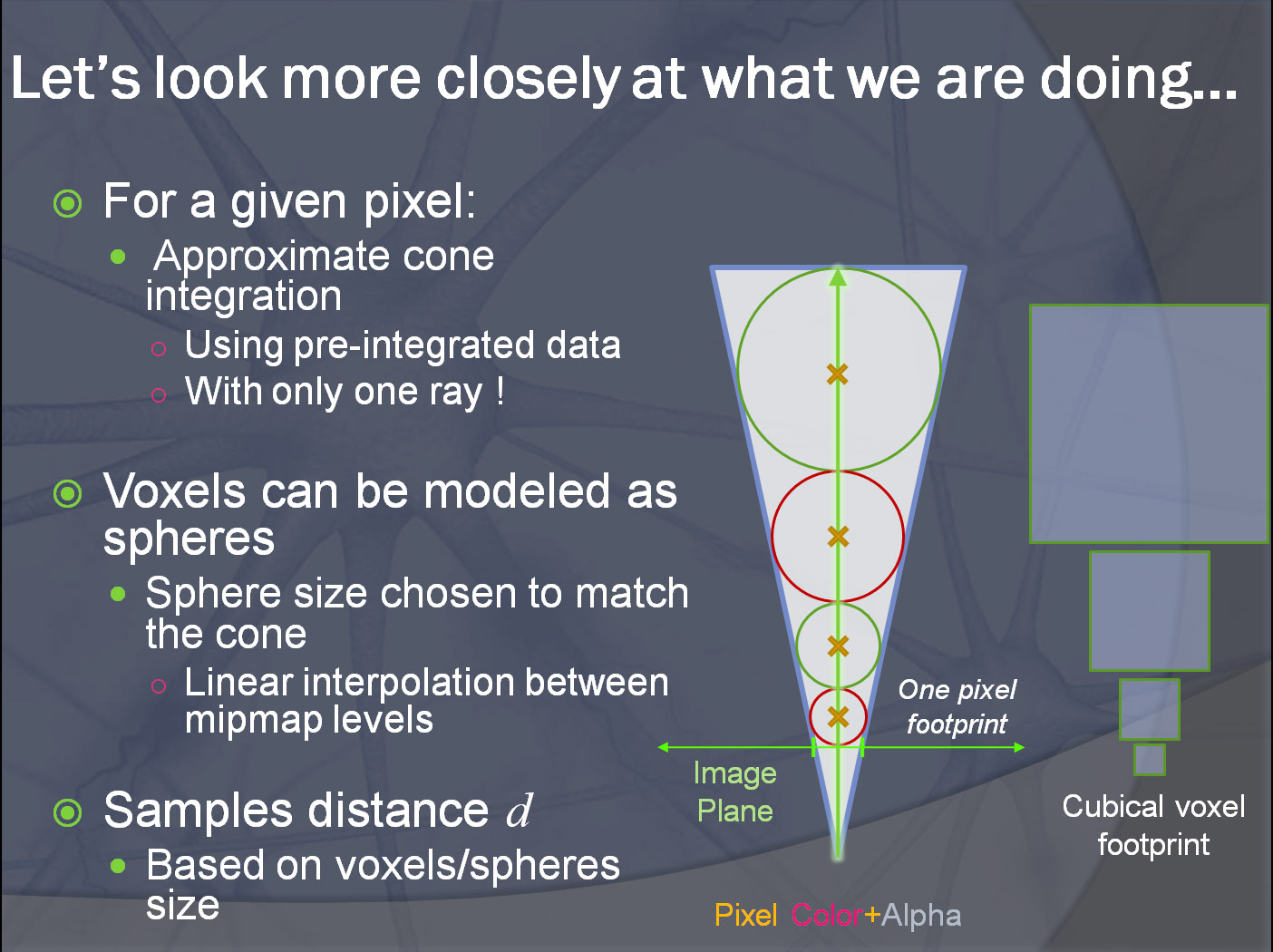

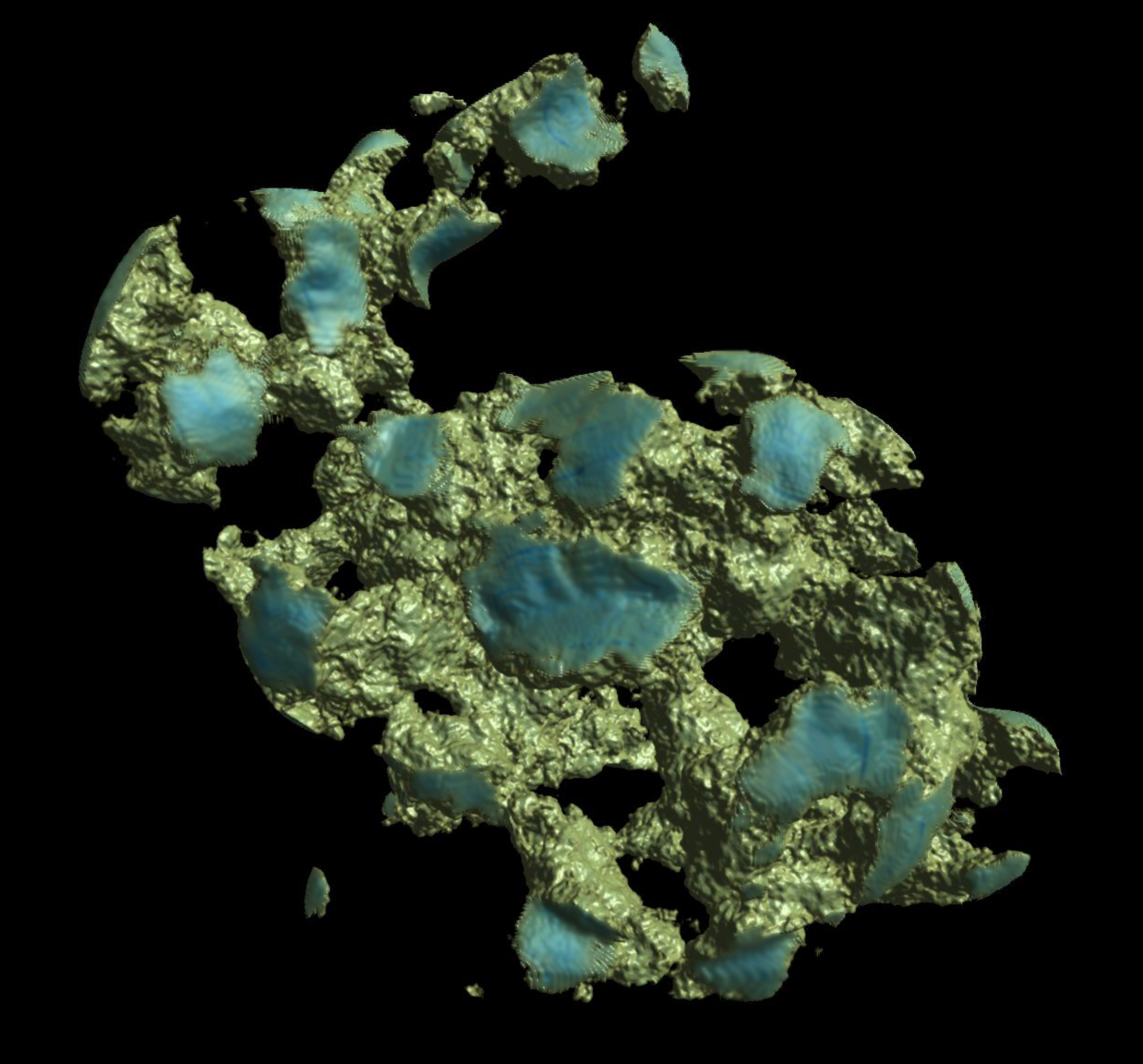

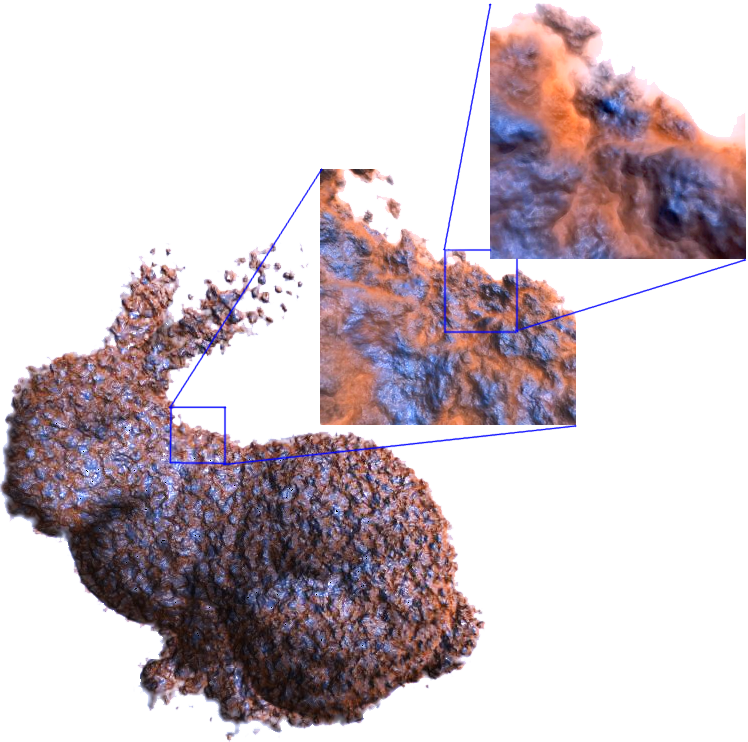

Not so many things today. In the morning, I went to the Real-Time Global Illumination for Dynamic Scenes course. It was quite interesting and provided a good synthesis of current state-of-the-art GI techniques. I also presented my poster on GigaVoxels at the poster session during the lunch break.

At the end of the day, an interesting session was the OpenCL Birds of a Feather. Mike Houston from ATI presented the OpenCL specification, its model, and the features it offers. Mike also explained how OpenCL will be implemented on ATI GPUs, and in particular that, due to the R7xx architecture, developers will need to vectorize their algorithms using vec4, as in shaders, to take advantage of the 5-component SIMD units (these units make up each of the 16 cores, which themselves run in SIMD and together form ATI’s equivalent of a multiprocessor). He announced the release of an OpenCL implementation from AMD, but only for CPUs! It seems they still don’t have a GPU implementation, and I wonder if they are waiting for the release of the Evergreen architecture to provide one. Next were Aaron Lefohn and Larry Seiler, who presented Intel’s future implementation. They particularly pushed the Task API of OpenCL, which seems to allow efficient implementation of task-parallel algorithms, with multiple kernels running concurrently and communicating. This kind of model supposes that different kernels can run on different cores of the GPU, something that is not possible with the current NVIDIA architecture (nor with the ATI one, I think). If I understood correctly, it also seems that their first OpenCL implementation will require the programmer to use 16-component vectors to fill their SIMD lanes. Finally, Simon Green presented the NVIDIA implementation and, as you know, they are the only ones offering a working OpenCL implementation right now (and have been since December 2008).

Day 4: Wednesday August 5

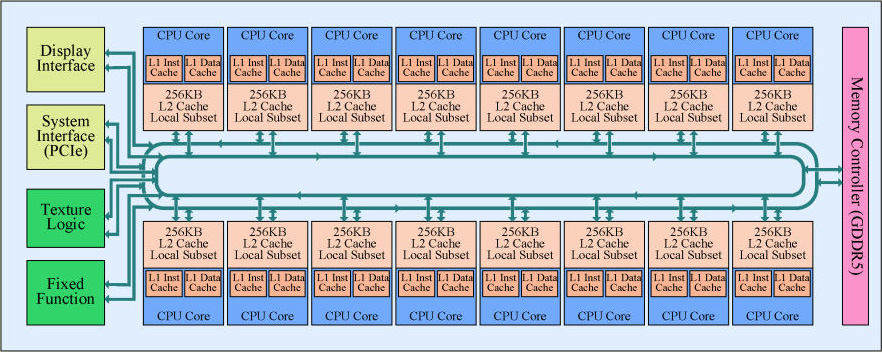

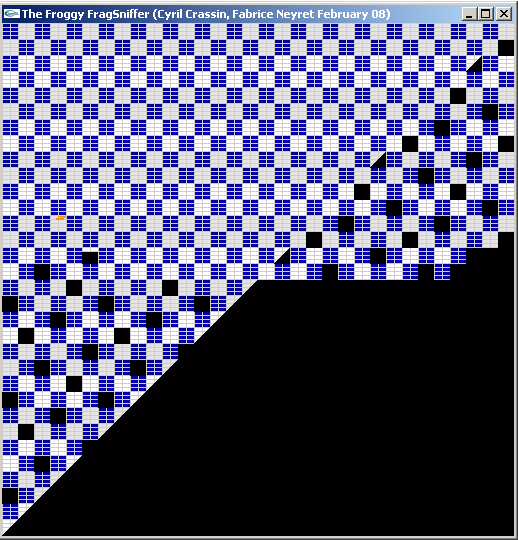

Interesting session from NVIDIA this afternoon: Alternative Rendering Pipelines on NVIDIA CUDA. Andrew Tatarinov and Alexander Kharlamov presented their CUDA implementations of a ray-tracing pipeline and a REYES pipeline. Both implementations use persistent threads to improve work balancing and per-MP use of compute resources, with uber-kernels that use dynamic branching to switch between multiple tasks. In addition, the REYES implementation uses work queues, implemented with prefix-sum scan operations, to feed the persistent threads with work and to let them communicate. I find this a really awesome model, but to me the problem is the register usage of this kind of uber-kernel. Ideally, better thread scheduling on the MPs and the ability to launch different threads on each MP would be even more efficient. More details here: http://developer.download.nvidia.com/presentations/2009/SIGGRAPH/3DVision_Develop_Design_Play_in_3D_Stereo.pdf
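As a rough illustration of how a prefix-sum scan can build such a work queue, here is a small sequential C++ sketch. It is my own simplification, not the code from the talk: `exclusiveScan` and `buildWorkQueue` are hypothetical names, and on a GPU the scan itself would run in parallel. Each producer announces how many work items it will emit, the exclusive scan turns those counts into write offsets, and every producer can then write into its own slice of the queue without contention.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Exclusive prefix sum: out[i] = in[0] + ... + in[i-1], with out[0] = 0.
std::vector<int> exclusiveScan(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    int running = 0;
    for (std::size_t i = 0; i < in.size(); ++i) {
        out[i] = running;
        running += in[i];
    }
    return out;
}

// counts[i] is the number of work items producer i wants to emit.
// The scan gives producer i its private write offset in the queue.
// Each queued item is tagged here with the index of the producer that emitted it.
std::vector<int> buildWorkQueue(const std::vector<int>& counts) {
    std::vector<int> offsets = exclusiveScan(counts);
    int total = counts.empty() ? 0 : offsets.back() + counts.back();
    std::vector<int> queue(total);
    for (std::size_t i = 0; i < counts.size(); ++i) {
        assert(counts[i] >= 0);  // a producer cannot emit a negative number of items
        for (int k = 0; k < counts[i]; ++k) {
            queue[offsets[i] + k] = static_cast<int>(i);
        }
    }
    return queue;
}
```

For example, with counts `{2, 0, 3, 1}` the offsets are `{0, 2, 2, 5}` and the queue holds six items, tightly packed, which persistent threads could then pull from.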

After many years of using this website as a kind of blog, I finally decided to create a separate blog, and I turned to Blogger. Publishing things here involved too much formatting effort, and I had become lazy about posting. In addition, I will keep this website for publishing my personal works and creations, while the blog will cover much wider topics. The intent of this new blog is to publish my thoughts and findings about GPUs, parallel programming, and computer graphics in general more regularly.

Timothy Farrar

I would just like to share a few small tips I found helpful when working with CUDA under Visual Studio.

Last week, Intel gave two talks about the Larrabee ISA, called Larrabee New Instructions (LRBni).

I have been in Boston for the last few days to attend the I3D (ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games) conference, where I presented our paper on

NVIDIA just published a beta version of CUDA 2.0 with the associated SDK. Among other things, it brings Windows Vista support. Linux support should follow soon. The really good news for graphics programmers is that 3D texture access is now supported within the API 😀

After having released 2D hardware register information for their R5xx and R6xx GPUs a few months ago, AMD/ATI has just published the first bits of open-source 3D programming documentation for their R5xx GPUs (Radeon X1000 class cards). Even if R5xx GPUs are pretty old now, AMD plans to release 3D specifications very soon for R6xx (Radeon HD 2000 class cards), their latest architecture (supporting SM 4.0 and featuring a unified shader architecture like the G80). This release is part of a larger AMD plan to open up their whole hardware, allowing the development of an open-source driver fully supporting the latest ATI chips (more infos

A year after the release of the G80, NVIDIA has finally published a first beta of its Cg 2.0 SDK, bringing Shader Model 4.0 support. A bit late, now that I have switched to GLSL… On top of that, the compiler version does not even seem to be more recent than the one included in the driver to compile GLSL (2.0.0.8 versus 2.0.1.8).

Almost a year ago now, the Khronos Group, to which control of OpenGL had just been transferred, unveiled the future toward which our favorite API (well, mine at least ;-) was heading: code names “Longs Peak” and “Mt. Evans”, two revisions planned for 2007 that amount to much more than a simple evolution of the API. Finally, a few weeks ago at SIGGRAPH 2007, “Longs Peak” was officially presented and named: OpenGL 3.0.
